k3s部署高可用雷池社区版

作者:约苗-运维师傅-候天鸿(社区32群)

部署前言

长亭科技的雷池开源WAF(Web Application FireWalld)是一款足够简单、足够好用、足够强的免费 WAF。基于业界领先的语义引擎检测技术,作为反向代理接入,保护你的网站不受黑客攻击。

至文档编写时官方开源版本与专业版并不支持HA高可用与集群模式部署。因需在生产环境部署故考虑架构必须至少是HA高可用,而官方不支持,仅在社区中发现有人编写过K8S集群中通过HelmChart的部署方案。

耗时长达一个月对开源版本的测试部署,最终部署方案采用在k3s集群之上采用HelmChart部署,Chart中镜像完全采用官方原镜像不做任何改动。

尽管如此,运行在k3s集群中的雷池WAF服务有且只能有一个POD副本,如有多个副本会导致某些功能异常,如:控制面板首页无访问计数变动、业务能正常转发但入侵行为无法正常检测与拦截。

目前此文档仅实现了WAF服务运行在K3S集群多个节点上,如有节点宕机,节点上的服务可自动切换至其它节点继续运行,并未实现服务多POD副本负载均衡。

雷池WAF官方网站: https://waf-ce.chaitin.cn/

雷池WAF官方GITHUB: https://github.com/chaitin/SafeLine

雷池WAF第三方HelmChart仓库: https://github.com/jangrui/charts

雷池WAF第三方HelmChart源码仓库: https://github.com/jangrui/SafeLine

个人腾讯Coding的雷池HelmChart仓库: https://g-otkk6267-helm.pkg.coding.net/Charts/safeline

部署前准备

准备3台服务器,配置在4C8G,生产环境建议采用8C16G及以上配置,操作系统为Ubuntu22.04,作为K3S集群的服务器。在K3S集群的基础上部署雷池WAF。当前文档配置信息如下:

| 主机名 | 主机IP | 用途 |

|---|---|---|

| waf-lan-k3s-master | 192.168.1.9 | K3S集群的master节点 |

| waf-lan-k3s-node1 | 192.168.1.7 | K3S集群的node1节点 |

| waf-lan-k3s-node2 | 192.168.1.8 | K3S集群的node2节点 |

部署K3S集群

部署k3s-Master节点服务

脚本部署master节点服务

安装k3s版本对标k8s-1.28.8版本,在部署同时禁用自带的traefik网关��与local-storage的存储类型

curl -sfL https://rancher-mirror.rancher.cn/k3s/k3s-install.sh | INSTALL_K3S_MIRROR=cn INSTALL_K3S_VERSION=v1.28.8+k3s1 sh -s - --disable traefik --disable local-storage

kubectl get nodes -owide #查看集群信息

kubectl get pod -A #查看集群pod启动情况

参照官方部署文档如下: https://docs.k3s.io/zh/quick-start https://docs.k3s.io/zh/installation/configuration 可部署升级的k3s版本,请查询官方如下地址: https://update.k3s.io/v1-release/channels

安装完毕后会有如下实用工具: kubectl、crictl、ctr、k3s-killall.sh 和 k3s-uninstall.sh

调整集群kubeconfig文件

- k3s默认的kubeconfig文件存放路径:

ls -l /etc/rancher/k3s/k3s.yaml

- 解决k3s下默认无/root/.kube/config文件问题

echo "export KUBECONFIG=/etc/rancher/k3s/k3s.yaml" >> /etc/profile

source /etc/profile

mkdir -p /root/.kube/

ln -sf /etc/rancher/k3s/k3s.yaml /root/.kube/config

cat /root/.kube/config #查看kubeconfig文件内容

- 将Master节点配置上一个污点,使其不可调度

kubectl taint nodes waf-lan-k3s-master node-role.kubernetes.io/control-plane:NoSchedule

# 查看是否设置成功

kubectl describe nodes waf-lan-k3s-master |grep Taints

# 命令显示内容如下:

Taints: node-role.kubernetes.io/control-plane:NoSchedule

配置k3s集群中的私有仓库信息

默认k3s集群中containerd中配置docker镜像的私有仓库地址为空,加速地址为默认的dockerhub仓库域名,必须手动进行指定才能正常拉取私有仓库中的容器镜像与使用国内镜像加速源。

k3s集群中自定义私有仓库地址,需要在master节点服务器上的/etc/rancher/k3s目录中新建registries.yaml文件。

在此文档中registries.yaml文件内容如下(注意:此文件中的密码值不是真实存在的):

mirrors:

"docker.io":

endpoint:

- "http://192.168.1.138:5000"

- "https://tshf4sa5.mirror.aliyuncs.com"

"harbor.scmttec.com":

endpoint:

- "https://harbor.scmttec.com"

configs:

"harbor.scmttec.com":

auth:

username: "robot$pull_image"

password: "b9RCmoH5vN5ZA0"

tls:

insecure_skip_verify: false

文件存放好以后,重启k3s服务使配置生效

systemctl restart k3s

安装helm工具

- helm官方下载工具压缩包并解压安装

下载页面:https://github.com/helm/helm/releases

下载地址:https://get.helm.sh/helm-v3.12.0-linux-amd64.tar.gz

wget https://get.helm.sh/helm-v3.12.0-linux-amd64.tar.gz

tar zxf helm-v3.12.0-linux-amd64.tar.gz

cp ./linux-amd64/helm /usr/local/bin/

rm -rf ./linux-amd64/ #删除解压出来的目录

- 配置helm命令和kubectl命令的自动补全功能

echo "source <(kubectl completion bash)" >> /etc/profile #添加kubectl命令自动补全

echo "source <(helm completion bash)" >> /etc/profile #添加helm命令自动补全

source /etc/profile #让功能立即生效

- 查看helm命令是否能正常使用

helm list -n kube-system

#显示如下信息:

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

traefik kube-system 1 2023-05-29 06:39:26.751316258 +0000 UTC deployed traefik-21.2.1+up21.2.0 v2.9.10

traefik-crd kube-system 1 2023-05-29 06:39:22.816811082 +0000 UTC deployed traefik-crd-21.2.1+up21.2.0 v2.9.10

部署k3s-Node节点服务

获�取k3s-Master节点的token文件

在部署k3s-node节点服务即k3s-agent服务之前,需要获取k3s-server服务的token文件,文件位置为master节点的/var/lib/rancher/k3s/server/token文件,在master节点执行如下命令获取token值:

cat /var/lib/rancher/k3s/server/token

#显示结果如下:

K10f890abb83ce8cdf8b5dbeff8edb628d10cc53c23fa9f56152db0cf22454546bf::server:fec37548170a2affec1e3ebd1fdf708d

通过官方脚本直接安装k3s-agent服务

k3s官方有k3s-agent服务的安装脚本,通过curl直接进行安装,在安装的同时指定server即master节点的IP地址与token值。 在2台Node节点服务器上直接执行命令如下:

curl -sfL https://rancher-mirror.rancher.cn/k3s/k3s-install.sh | INSTALL_K3S_MIRROR=cn INSTALL_K3S_VERSION=v1.28.8+k3s1 K3S_URL=https://192.168.1.9:6443 K3S_TOKEN=K10f890abb83ce8cdf8b5dbeff8edb628d10cc53c23fa9f56152db0cf22454546bf::server:fec37548170a2affec1e3ebd1fdf708d sh -

执行后在master节点通过kubectl命令查看新加节点状态:

kubectl get nodes -owide

# 显示结果如下:

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

waf-lan-k3s-master Ready control-plane,master 17d v1.28.8+k3s1 192.168.1.9 <none> Ubuntu 22.04.1 LTS 5.15.0-69-generic containerd://1.7.11-k3s2

waf-lan-k3s-node2 Ready <none> 17d v1.28.8+k3s1 192.168.1.8 <none> Ubuntu 22.04.1 LTS 5.15.0-69-generic containerd://1.7.11-k3s2

waf-lan-k3s-node1 Ready <none> 17d v1.28.8+k3s1 192.168.1.7 <none> Ubuntu 22.04.1 LTS 5.15.0-69-generic containerd://1.7.11-k3s2

至此K3S集群基础服务已成功部署完毕!

安装k3s集群存储与网关组件

安装Nginx-Ingress组件

通过nginx-ingress官方github站点获取最新安装版本及文件,并对官方部署文件进行修改后得出最终文件nginx-ingress-v1.10.0.yaml。

官方github仓库地址: https://github.com/kubernetes/ingress-nginx

官方对裸机部署注意事项: https://github.com/kubernetes/ingress-nginx/blob/controller-v1.10.0/docs/deploy/baremetal.md

官方裸机部署文件地址: https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.8.2/deploy/static/provider/baremetal/deploy.yaml

修改后的nginx-ingress-v1.10.0.yaml部署文件内容如下:

apiVersion: v1

kind: Namespace

metadata:

labels:

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

name: ingress-nginx

---

apiVersion: v1

automountServiceAccountToken: true

kind: ServiceAccount

metadata:

labels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.10.0

name: ingress-nginx

namespace: ingress-nginx

---

apiVersion: v1

kind: ServiceAccount

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.10.0

name: ingress-nginx-admission

namespace: ingress-nginx

---

apiVersion: rbac.authorization.k8s.io/v1

kind: Role

metadata:

labels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.10.0

name: ingress-nginx

namespace: ingress-nginx

rules:

- apiGroups:

- ""

resources:

- namespaces

verbs:

- get

- apiGroups:

- ""

resources:

- configmaps

- pods

- secrets

- endpoints

verbs:

- get

- list

- watch

- apiGroups:

- ""

resources:

- services

verbs:

- get

- list

- watch

- apiGroups:

- networking.k8s.io

resources:

- ingresses

verbs:

- get

- list

- watch

- apiGroups:

- networking.k8s.io

resources:

- ingresses/status

verbs:

- update

- apiGroups:

- networking.k8s.io

resources:

- ingressclasses

verbs:

- get

- list

- watch

- apiGroups:

- coordination.k8s.io

resourceNames:

- ingress-nginx-leader

resources:

- leases

verbs:

- get

- update

- apiGroups:

- coordination.k8s.io

resources:

- leases

verbs:

- create

- apiGroups:

- ""

resources:

- events

verbs:

- create

- patch

- apiGroups:

- discovery.k8s.io

resources:

- endpointslices

verbs:

- list

- watch

- get

---

apiVersion: rbac.authorization.k8s.io/v1

kind: Role

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.10.0

name: ingress-nginx-admission

namespace: ingress-nginx

rules:

- apiGroups:

- ""

resources:

- secrets

verbs:

- get

- create

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

labels:

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.10.0

name: ingress-nginx

rules:

- apiGroups:

- ""

resources:

- configmaps

- endpoints

- nodes

- pods

- secrets

- namespaces

verbs:

- list

- watch

- apiGroups:

- coordination.k8s.io

resources:

- leases

verbs:

- list

- watch

- apiGroups:

- ""

resources:

- nodes

verbs:

- get

- apiGroups:

- ""

resources:

- services

verbs:

- get

- list

- watch

- apiGroups:

- networking.k8s.io

resources:

- ingresses

verbs:

- get

- list

- watch

- apiGroups:

- ""

resources:

- events

verbs:

- create

- patch

- apiGroups:

- networking.k8s.io

resources:

- ingresses/status

verbs:

- update

- apiGroups:

- networking.k8s.io

resources:

- ingressclasses

verbs:

- get

- list

- watch

- apiGroups:

- discovery.k8s.io

resources:

- endpointslices

verbs:

- list

- watch

- get

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.10.0

name: ingress-nginx-admission

rules:

- apiGroups:

- admissionregistration.k8s.io

resources:

- validatingwebhookconfigurations

verbs:

- get

- update

---

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

labels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.10.0

name: ingress-nginx

namespace: ingress-nginx

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: ingress-nginx

subjects:

- kind: ServiceAccount

name: ingress-nginx

namespace: ingress-nginx

---

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.10.0

name: ingress-nginx-admission

namespace: ingress-nginx

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: ingress-nginx-admission

subjects:

- kind: ServiceAccount

name: ingress-nginx-admission

namespace: ingress-nginx

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

labels:

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.10.0

name: ingress-nginx

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: ingress-nginx

subjects:

- kind: ServiceAccount

name: ingress-nginx

namespace: ingress-nginx

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.10.0

name: ingress-nginx-admission

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: ingress-nginx-admission

subjects:

- kind: ServiceAccount

name: ingress-nginx-admission

namespace: ingress-nginx

---

apiVersion: v1

data:

allow-snippet-annotations: "false"

kind: ConfigMap

metadata:

labels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.10.0

name: ingress-nginx-controller

namespace: ingress-nginx

---

apiVersion: v1

kind: Service

metadata:

labels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.10.0

name: ingress-nginx-controller

namespace: ingress-nginx

spec:

ipFamilies:

- IPv4

ipFamilyPolicy: SingleStack

ports:

- appProtocol: http

name: http

port: 80

protocol: TCP

targetPort: http

- appProtocol: https

name: https

port: 443

protocol: TCP

targetPort: https

selector:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

#type: NodePort

type: ClusterIP

---

apiVersion: v1

kind: Service

metadata:

labels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.10.0

name: ingress-nginx-controller-admission

namespace: ingress-nginx

spec:

ports:

- appProtocol: https

name: https-webhook

port: 443

targetPort: webhook

selector:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

type: ClusterIP

---

apiVersion: apps/v1

#kind: Deployment

kind: DaemonSet

metadata:

labels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.10.0

name: ingress-nginx-controller

namespace: ingress-nginx

spec:

minReadySeconds: 0

revisionHistoryLimit: 10

selector:

matchLabels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

#strategy:

# rollingUpdate:

# maxUnavailable: 1

# type: RollingUpdate

template:

metadata:

labels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.10.0

spec:

hostNetwork: true

dnsPolicy: ClusterFirst

containers:

- args:

- /nginx-ingress-controller

- --publish-service=$(POD_NAMESPACE)/ingress-nginx-controller

- --election-id=ingress-nginx-leader

- --controller-class=k8s.io/ingress-nginx

- --ingress-class=nginx

- --configmap=$(POD_NAMESPACE)/ingress-nginx-controller

- --validating-webhook=:8443

- --validating-webhook-certificate=/usr/local/certificates/cert

- --validating-webhook-key=/usr/local/certificates/key

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

- name: LD_PRELOAD

value: /usr/local/lib/libmimalloc.so

#image: registry.k8s.io/ingress-nginx/controller:v1.10.0@sha256:42b3f0e5d0846876b1791cd3afeb5f1cbbe4259d6f35651dcc1b5c980925379c

image: registry.cn-hangzhou.aliyuncs.com/google_containers/nginx-ingress-controller:v1.10.0

imagePullPolicy: IfNotPresent

lifecycle:

preStop:

exec:

command:

- /wait-shutdown

livenessProbe:

failureThreshold: 5

httpGet:

path: /healthz

port: 10254

scheme: HTTP

initialDelaySeconds: 10

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 1

name: controller

ports:

- containerPort: 80

name: http

protocol: TCP

- containerPort: 443

name: https

protocol: TCP

- containerPort: 8443

name: webhook

protocol: TCP

readinessProbe:

failureThreshold: 3

httpGet:

path: /healthz

port: 10254

scheme: HTTP

initialDelaySeconds: 10

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 1

resources:

requests:

cpu: 100m

memory: 90Mi

securityContext:

allowPrivilegeEscalation: false

capabilities:

add:

- NET_BIND_SERVICE

drop:

- ALL

readOnlyRootFilesystem: false

runAsNonRoot: true

runAsUser: 101

seccompProfile:

type: RuntimeDefault

volumeMounts:

- mountPath: /usr/local/certificates/

name: webhook-cert

readOnly: true

dnsPolicy: ClusterFirst

nodeSelector:

kubernetes.io/os: linux

serviceAccountName: ingress-nginx

terminationGracePeriodSeconds: 300

volumes:

- name: webhook-cert

secret:

secretName: ingress-nginx-admission

---

apiVersion: batch/v1

kind: Job

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.10.0

name: ingress-nginx-admission-create

namespace: ingress-nginx

spec:

template:

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.10.0

name: ingress-nginx-admission-create

spec:

containers:

- args:

- create

- --host=ingress-nginx-controller-admission,ingress-nginx-controller-admission.$(POD_NAMESPACE).svc

- --namespace=$(POD_NAMESPACE)

- --secret-name=ingress-nginx-admission

env:

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

#image: registry.k8s.io/ingress-nginx/kube-webhook-certgen:v1.4.0@sha256:44d1d0e9f19c63f58b380c5fddaca7cf22c7cee564adeff365225a5df5ef3334

image: registry.cn-hangzhou.aliyuncs.com/google_containers/kube-webhook-certgen:v1.4.0

imagePullPolicy: IfNotPresent

name: create

securityContext:

allowPrivilegeEscalation: false

capabilities:

drop:

- ALL

readOnlyRootFilesystem: true

runAsNonRoot: true

runAsUser: 65532

seccompProfile:

type: RuntimeDefault

nodeSelector:

kubernetes.io/os: linux

restartPolicy: OnFailure

serviceAccountName: ingress-nginx-admission

---

apiVersion: batch/v1

kind: Job

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.10.0

name: ingress-nginx-admission-patch

namespace: ingress-nginx

spec:

template:

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.10.0

name: ingress-nginx-admission-patch

spec:

containers:

- args:

- patch

- --webhook-name=ingress-nginx-admission

- --namespace=$(POD_NAMESPACE)

- --patch-mutating=false

- --secret-name=ingress-nginx-admission

- --patch-failure-policy=Fail

env:

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

#image: registry.k8s.io/ingress-nginx/kube-webhook-certgen:v1.4.0@sha256:44d1d0e9f19c63f58b380c5fddaca7cf22c7cee564adeff365225a5df5ef3334

image: registry.cn-hangzhou.aliyuncs.com/google_containers/kube-webhook-certgen:v1.4.0

imagePullPolicy: IfNotPresent

name: patch

securityContext:

allowPrivilegeEscalation: false

capabilities:

drop:

- ALL

readOnlyRootFilesystem: true

runAsNonRoot: true

runAsUser: 65532

seccompProfile:

type: RuntimeDefault

nodeSelector:

kubernetes.io/os: linux

restartPolicy: OnFailure

serviceAccountName: ingress-nginx-admission

---

apiVersion: networking.k8s.io/v1

kind: IngressClass

metadata:

labels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.10.0

name: nginx

spec:

controller: k8s.io/ingress-nginx

---

apiVersion: admissionregistration.k8s.io/v1

kind: ValidatingWebhookConfiguration

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.10.0

name: ingress-nginx-admission

webhooks:

- admissionReviewVersions:

- v1

clientConfig:

service:

name: ingress-nginx-controller-admission

namespace: ingress-nginx

path: /networking/v1/ingresses

failurePolicy: Fail

matchPolicy: Equivalent

name: validate.nginx.ingress.kubernetes.io

rules:

- apiGroups:

- networking.k8s.io

apiVersions:

- v1

operations:

- CREATE

- UPDATE

resources:

- ingresses

sideEffects: None

在master节点直接apply此文件进行部署

kubectl apply -f nginx-ingress-v1.10.0.yaml

查看并核实是否部署成功

kubectl get pod -n ingress-nginx

NAME READY STATUS RESTARTS AGE

ingress-nginx-admission-create-7gkvs 0/1 Completed 0 6d2h

ingress-nginx-admission-patch-lc9zc 0/1 Completed 0 6d2h

ingress-nginx-controller-dwzm4 1/1 Running 0 6d2h

ingress-nginx-controller-47hr2 1/1 Running 0 6d2h

在节点上查看是否生效

netstat -ntpl

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 0.0.0.0:443 0.0.0.0:* LISTEN 80515/nginx: master

tcp 0 0 0.0.0.0:443 0.0.0.0:* LISTEN 80515/nginx: master

tcp 0 0 0.0.0.0:443 0.0.0.0:* LISTEN 80515/nginx: master

tcp 0 0 0.0.0.0:443 0.0.0.0:* LISTEN 80515/nginx: master

tcp 0 0 127.0.0.1:10010 0.0.0.0:* LISTEN 13145/containerd tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN 80515/nginx: master

tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN 80515/nginx: master

tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN 80515/nginx: master

tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN 80515/nginx: master

............

注意nginx-ingress部署完毕后master节点因有污点,故默认无法暴露80与443端口,仅有集群节点可以使用。

至此nginx-ingress服务成功部署完毕。

通过HelmChart安装nfs-provisioner组件

nfs-subdir-external-provisioner服务为K8S或K3S集群自动挂载NFS目录,作为集群后端持久化数据存储使用的一个第三方组件。

本文中将采用HelmChart的方式进行部署并生成集群的stroage-class存储类型。

增加helm的公共远程仓库并部署

#查看helm的版本号

helm version

#列出所有已添加的helm仓库列表

helm repo list

#增加nfs-subdir的公共helm仓库

helm repo add nfs-subdir-external-provisioner https://kubernetes-sigs.github.io/nfs-subdir-external-provisioner/

#查看所有helm仓库

helm repo list

NAME URL

nfs-subdir-external-provisioner https://kubernetes-sigs.github.io/nfs-subdir-external-provisioner/

#更新helm仓库信息

helm repo update

查看HelmChart中NFS相关的版本信息

#查看aliyun-app-hub仓库中是否有chart

helm search repo nfs-subdir-external-provisioner|grep nfs-subdir-external-provisioner

nfs-subdir-external-provisioner/nfs-subdir-exte... 4.0.10 4.0.2 nfs-subdir-external-provisioner is an automatic...

#注释:从以上信息可看出存在nfs-client-provisioner的chart

#安装nfs命令客户端

apt install -y nfs-common

#注意:如果要使用NFS作为后端存储,已Ubuntu系统为例,所有集群节点都必须安装NFS客户端,否则无法进行挂在NFS存储

安装nfs-client-provisioner

helm install --namespace kube-system nfs-subdir-external-provisioner nfs-subdir-external-provisioner/nfs-subdir-external-provisioner \

--set nfs.server=192.168.1.103 \

--set nfs.path=/nfs_data/waf-lan-k3s-data \

--set image.repository=registry.cn-hangzhou.aliyuncs.com/k8s_sys/nfs-subdir-external-provisioner \

--set image.tag=v4.0.2 \

--set storageClass.name=cfs-client

--set storageClass.defaultClass=ture

--set tolerations=[{tolerations[0].operator=Exists}{tolerations[0].effect=NoSchedule}]

注释:通过helm部署名为nfs-client-provisioner的helm-chart,部署到集群的kube-system命令空间中,集群的存储类型名称为:cfs-client

参数选项:

nfs.server为nfs服务器的IP地址

nfs.path为NFS服务器共享出来的目录路径

storageClass.name为设置集群存储类型的名称

storageClass.defaultClass为是否设置为集群的默认存储类型

tolerations为设定此服务允许在禁止调度的节点上运行,如master节点

查看部署后的服务

kubectl get pod -n kube-system

# 显示信息如下:

NAME READY STATUS RESTARTS AGE

nfs-subdir-external-provisioner-6f5f6d764b-2z2ns 1/1 Running 3 (6d22h ago) 17d

...........

查看集群的存储类型

kubectl get sc

# 显示信息如下:

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

cfs-client (default) cluster.local/nfs-subdir-external-provisioner Delete Immediate true 17d

Helm部署雷池WAF服务

雷池WAF官方仅支持docker单机容器化部署,社区内有人提供了一个helmchart的部署方案,此文档中按照第三方的helmchart的部署方案进行部署。当前第三方的仓库在文档顶部已由链接,此处不再赘述。

master节点拉取helmchart的tgz包

直接通过命令进行拉取

cd /root/

helm repo add safeline "https://g-otkk6267-helm.pkg.coding.net/Charts/safeline"

helm repo update

helm fetch --version 5.2.0 safeline/safeline

创建values文件

直接通过命令创建values.yaml文件,文件内容如下:

detector:

image:

registry: 'swr.cn-east-3.myhuaweicloud.com/chaitin-safeline'

repository: safeline-detector

tengine:

image:

registry: 'swr.cn-east-3.myhuaweicloud.com/chaitin-safeline'

repository: safeline-tengine

集群执行helm命令安装雷池WAF

- 直接在k3s集群的master节点服务器上通过命令进行安装

cd /root/

helm install safeline --namespace safeline safeline-5.2.0.tgz --values values.yaml --create-namespace

- 直接在k3s集群的master节点服务器上通过命令进行升级

cd /root/

helm upgrade -n safeline safeline safeline-5.2.0.tgz --values values.yaml

- 查看安装后的pod运行情况

kubectl get pod -n safeline

# 显示内容如下:

NAME READY STATUS RESTARTS AGE

safeline-database-0 1/1 Running 0 21h

safeline-bridge-688c56547c-stdnd 1/1 Running 0 20h

safeline-fvm-54fbf6967c-ns8rg 1/1 Running 0 20h

safeline-luigi-787946d84f-bmzkf 1/1 Running 0 20h

safeline-detector-77fbb59575-btwpl 1/1 Running 0 20h

safeline-mario-f85cf4488-xs2kp 1/1 Running 1 (20h ago) 20h

safeline-tengine-8446745b7f-wlknr 1/1 Running 0 20h

safeline-mgt-667f9477fd-mtlpj 1/1 Running 0 20h

- 查看安装后的svc端口暴露情况

kubectl get svc -n safeline

# 显示内容如下:

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

safeline-tengine ClusterIP 10.43.1.38 <none> 65443/TCP,80/TCP 15d

safeline-luigi ClusterIP 10.43.119.40 <none> 80/TCP 15d

safeline-fvm ClusterIP 10.43.162.1 <none> 9004/TCP,80/TCP 15d

safeline-detector ClusterIP 10.43.248.81 <none> 8000/TCP,8001/TCP 15d

safeline-mario ClusterIP 10.43.156.13 <none> 3335/TCP 15d

safeline-pg ClusterIP 10.43.176.51 <none> 5432/TCP 15d

safeline-tengine-nodeport NodePort 10.43.219.148 <none> 80:30080/TCP,443:30443/TCP 15d

safeline-mgt NodePort 10.43.243.181 <none> 1443:31443/TCP,80:32009/TCP,8000:30544/TCP 15d

至此helm部署雷池开源WAF服务已成功,可通过k3s-node节点的IP+safeline-mgt暴露的NodePort端口进行访问雷池WAF控制台,此文档中为:https://192.168.1.9:31443

Nginx-Ingress接入防护站点域名至WAF

Nginx-Ingress的选择说明

登录雷池开源WAF服务后,需要在防护站点中配置需要接入WAF的防护站点,以及接入WAF后的上级代理转发IP或域名。

所有的接入站点均通过safeline-tengine服务进行接入转发,因雷池WAF部署在kubernetes集群中

就算将safeline-tengine服务通过NodePort暴露出去后,如果将域名直接指向node节点的公网IP+NodePort端口,tengine服务也获取不到X-Forwarded-For的header头部信息,只能通过网络获取,而网络获取的IP仅只能显示kubernetes的内网POD的IP,不是真实的客户端公网IP。

获取不到X-Forwarded-For字段的nginx访问日志示例,以及通过网络只能获取到内网IP:10.42.1.1:

10.42.1.1 - - [16/Apr/2024:16:52:36 +0800] "www.abc.com" "GET /message-ajaxGetMessage-0.html HTTP/1.1" 200 0 "https://www.abc.com/my/" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/123.0.0.0 Safari/537.36" ""

故只能通过Nginx-Ingress来进行转发至WAF的safeline-tengine服务,此时safeline-tengine服务才能获取到X-Forwarded-For的头部信息,可以获取到真实的客户端公网IP地址,只有获取到客户端真实IP后才能做封堵以及黑白名单规则。

雷池WAF控制台中的“防护站点”-“代理配置”中“源 IP 获取方式”设置为“从HTTP Header中获取”,下面的Header值为:

X-Forwarded-For

获取到nginx-ingress中转发过来的X-Forwarded-For字段的tengine中访问日志示例:

192.168.3.45 - - [16/Apr/2024:16:52:36 +0800] "www.abc.com" "GET /message-ajaxGetMessage-0.html HTTP/1.1" 200 0 "https://www.abc.com/my/" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/123.0.0.0 Safari/537.36" "222.234.923.56"

除Nginx-Ingress可以实现客户端真实IP外,k3s自带的teafik也能实现,但需要做一定的修改,感兴趣的可自行研究此处再做赘述。

Nginx-Ingress配置举例

此文档中比如需要配置www.abc.com域名的接入,除雷池WAF的防护站点需配置外,还需要创建Nginx-Ingress关于该域名的资源清单文件。kubernetes中的资源清单详细解读,请参见kubernetes官方网站。

除Nginx-Ingress配置外,在雷池WAF控制台还需要做一些类似防护站点等配置,此处不赘述,详情参见雷池WAF官方文档。

此清单文件包含如下2个方面:

- 域名证书文件资源清单文件,即kubernetes的secret类型

文件举例(证书内容通过了base64加密后的值,示例中的值不是真实存在的):

apiVersion: v1

kind: Secret

metadata:

name: www-abc-com

namespace: safeline

type: kubernetes.io/tls

# The TLS secret must contain keys named 'tls.crt' and 'tls.key' that contain the certificate and private key to use for TLS.

data:

tls.crt: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tDQpNSUlHYXpDQ0JWT2dBd0lCQWdJUkFQeWFPRDdFM2dicFZ3a2RRZWJ4M2Iwd0RRWUpLb1pJaHZjTkFRRUxCUUF3DQpYREVMTUFrR0ExVUVCaE1DUTA0eEdqQVlCZ05WQkFvVEVWZHZWSEoxY3lCRFFTQk1hVzFwZEdWa01URXdMd1lEDQpWUVFERENoWGIxUnlkWE1nVDFZZ1UyVnlkbVZ5SUVOQklDQmJVblZ1SUdKNUlIUm9aU0JKYzNOMVpYSmRNQjRYDQpEVEl6

tls.key: LS0tLS1CRUdJTiBSU0EgUFJJVkFURSBLRVktLS0tLQpNSUlFb1FJQkFBS0NBUUVBZ0FYRDRSTW44RGozdVNFOGczbVQzK3hYeDFDRzhJTURkdmdvQVB6N2gxbHVPY1lDCjdnYnUxbFFaVEphVno1YzNtcXF6YXpsK0Vtdjh2Y2hQQS9FYWI3TEVpV1A0ZUZpd0VXZFU3NVVqeTMxR3BnT2kKRnVzS1RidXhPN1gvc0ZNbmtrdDFvbjI5N1Vrc2JCNG1iV3BKa0RMbU0xUHc5bFpuK21TWmNQbXp0L3dmcW5SZgpqbGx3MDZ3M2w0eCtTRFpyd2syMVdLY2NWRExqdGp5TGNFbXlrNTIyTUIyVGhZck1uNjh5bHA5UG5vYndNTEx4CmE1WFozUkFtL0NPeTd2TG5FZXdFb

- Ingress资源清单文件,即绑定指定域名、域名证书以及Ingress后端转发svc使用

Ingress文件举例:

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: www-abc-com-ingress

namespace: safeline

spec:

ingressClassName: nginx

tls:

- hosts:

- www.abc.com

secretName: scmttec-com

rules:

- host: www.abc.com

http:

paths:

- path: /

pathType: Prefix

backend:

service:

name: safeline-tengine

port:

number: 80

应用该配置并查看访问情况

- 通过

kubectl命令来应用以上创建的两个yaml文件

kubectl apply -f www-abc-com-secret.yaml

kubectl apply -f www-abc-com-ingress.yaml

- 通过

kubectl命令查看创建的结果

kubectl get secrets -n safeline

# 显示内容如下:

NAME TYPE DATA AGE

www-abc-com kubernetes.io/tls 2 15d

kubectl get ingress -n safeline

# 显示内容如下:

NAME CLASS HOSTS ADDRESS PORTS AGE

www-abc-com-ingress nginx www.abc.com 10.43.109.170 80, 443 7d5h

最终通过web页面访问绑定接入的域名,页面能正常访问并转发,即为配置成功!

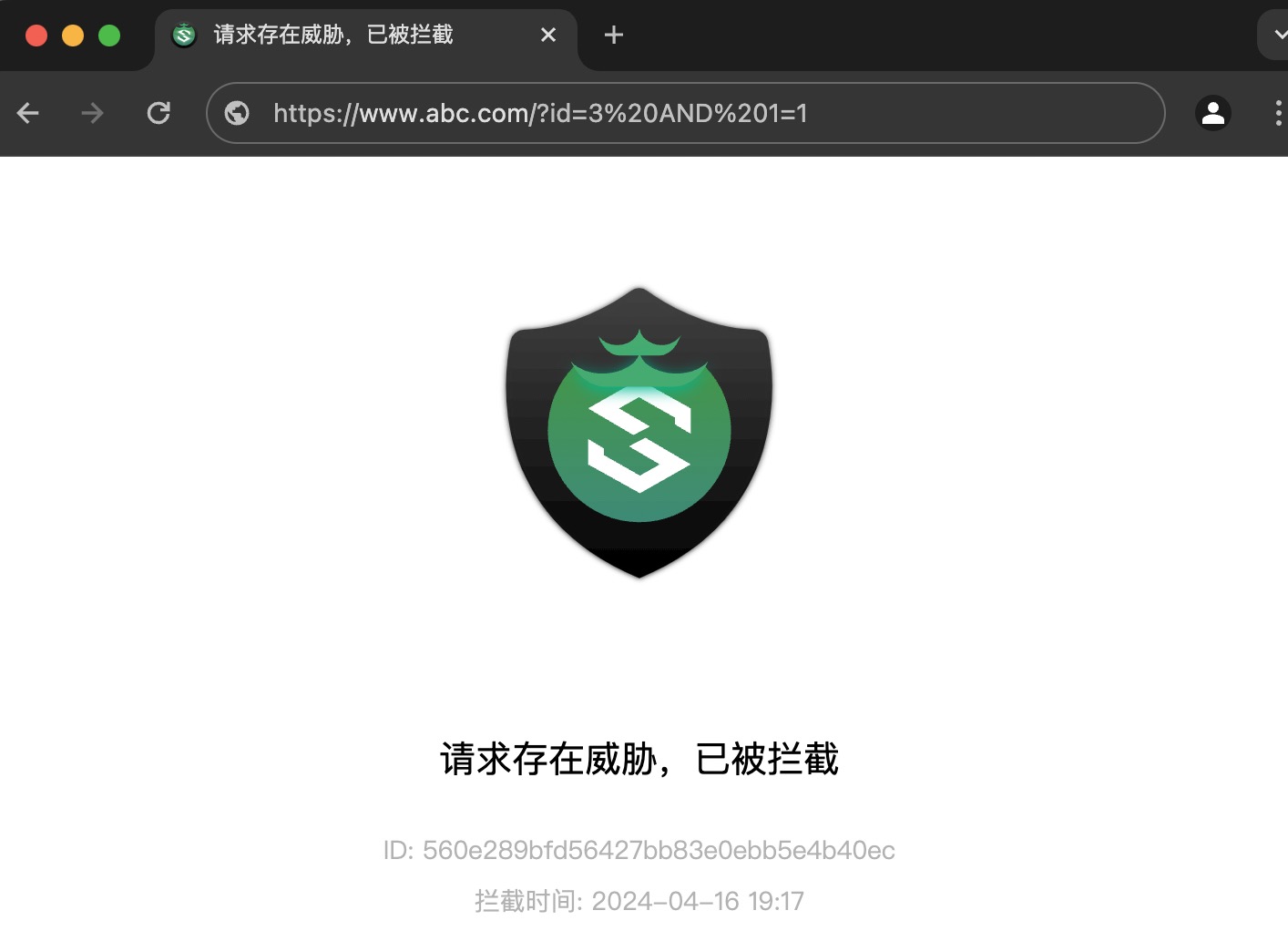

测试攻击防护

此处以www.abc.com域名为例,首先此域名需解析到WAF所在的k3s集群节点服务器IP上。

攻击语句举例:

curl -v https://www.abc.com/\?id\=3%20AND%201\=1

# 显示内容如下:

<!DOCTYPE html>

<html lang="zh">

<head>

<meta charset="utf-8" />

<meta name="viewport" content="width=device-width,initial-scale=1" />

<title>请求存在威胁,已被拦截</title>

...............

此处如显示雷池WAF标准的拦截页面提示,表示以成功拦截,同时在雷池WAF控制台中的“攻击事件”菜单中也有相应的攻击事件提醒信息。